Why I Built Polos: Durable Execution for AI Agents

When I started building AI agents, getting a demo working was easy. But once we put them in production, things got complicated fast.

We needed a bunch of infra:

Kafka to pass events between agents

Retry logic - but if an agent fails halfway through, you can’t just restart it. It may have already charged the customer or sent an email.

Concurrency control so we didn’t blow through our OpenAI quota

Observability to actually see what the agents were doing

We ended up bolting together Kafka, a durable execution framework, and other heavyweight infrastructure just to run agents reliably. Then we were stuck operating all of it: time we should’ve spent building the actual product!

I realized every team building agents hits the same wall. We’re missing an AI-native platform that handles this out of the box.

That’s why I built Polos.

What is Polos?

Polos is a durable execution platform for AI agents. It gives you stateful infrastructure to run long-running, autonomous agents reliably at scale - with a built-in event system, so you don’t need to bolt on Kafka or RabbitMQ.

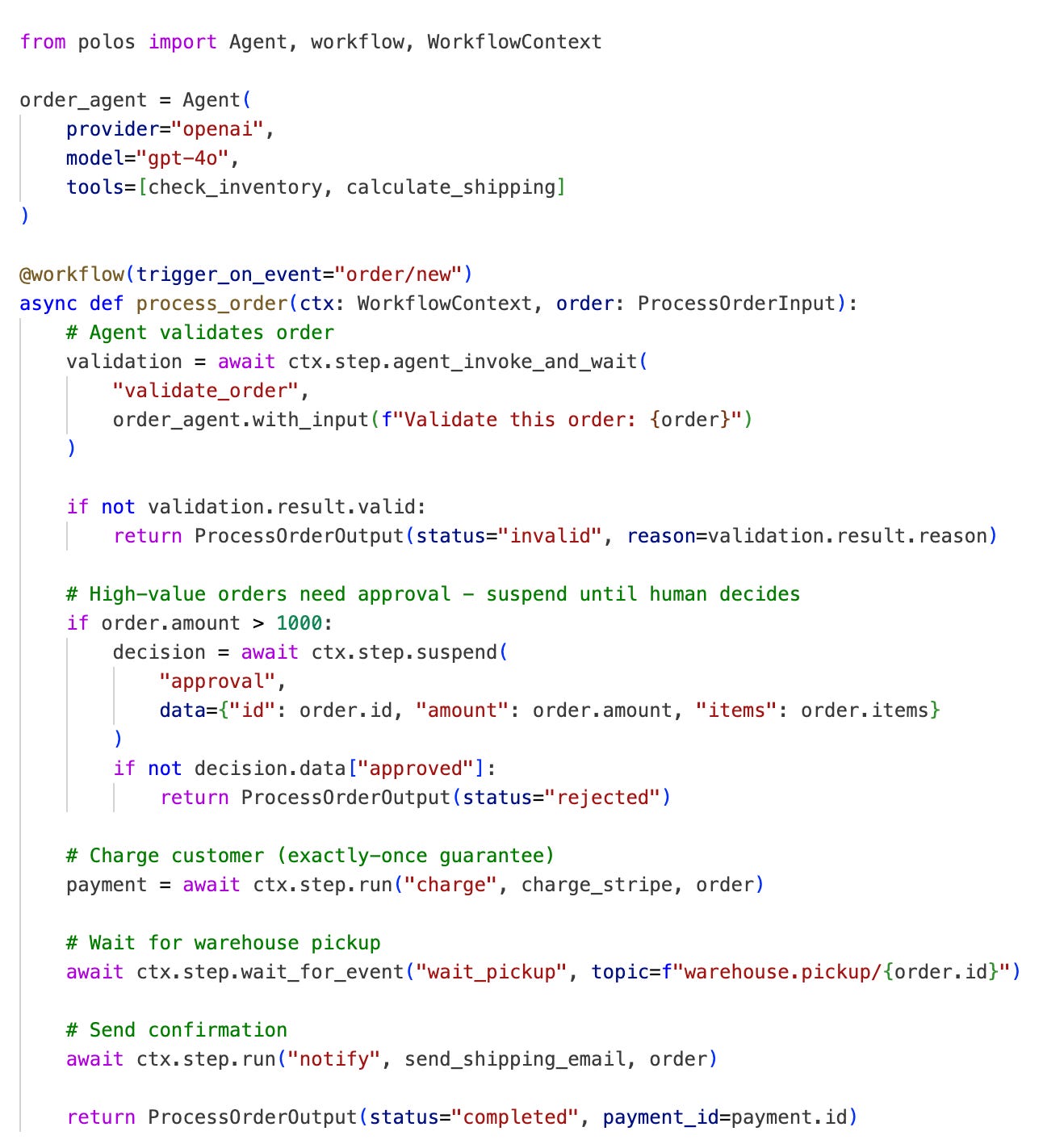

You write plain Python or TypeScript. No DAGs, no graph syntax. Polos handles the durability, the retries, the coordination.

This workflow survives crashes, resumes mid-execution, and pauses for approval - with zero manual checkpointing.

What Polos Gives You

Durable state. If your agent crashes on step 18 of 20, it resumes from step 18, not step 1. Every side effect, whether an LLM call, a tool execution, or an API request, is checkpointed. If your agent already charged Stripe via a tool call before the crash, that charge isn’t repeated on resume: Polos replays the result from its log. No wasted LLM calls, no duplicate charges, no double-sends.
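To make checkpoint-and-replay concrete, here is a minimal Python sketch. It assumes a plain in-memory list standing in for Polos's durable log. `StepJournal`, `charge_card`, and `send_email` are hypothetical names for illustration and are not the Polos SDK API. Steps are matched to recorded results by position, so the sketch only works if the workflow code is deterministic, meaning it issues the same steps in the same order on every run.

```python
from typing import Any, Callable


class StepJournal:
    """Hypothetical stand-in for a durable log: step results recorded in execution order."""

    def __init__(self, records: list) -> None:
        self.records = records  # Shared list that survives a "crash" in this sketch.
        self.position = 0       # Index of the next step in the current run.

    def step(self, fn: Callable[[], Any]) -> Any:
        if self.position < len(self.records):
            # Replay: this step finished in an earlier run, so reuse its recorded result.
            result = self.records[self.position]
        else:
            # First execution: perform the side effect, then checkpoint its result.
            result = fn()
            self.records.append(result)
        self.position += 1
        return result


charges: list = []  # Each entry is one (pretend) Stripe charge.
emails: list = []   # Each entry is one (pretend) confirmation email.


def charge_card(amount_cents: int) -> str:
    charges.append(amount_cents)
    return f"ch_{len(charges)}"


def send_email(charge_id: str) -> str:
    emails.append(charge_id)
    return "sent"


def order_workflow(journal: StepJournal, crash_after_charge: bool) -> str:
    charge_id = journal.step(lambda: charge_card(4200))
    if crash_after_charge:
        raise RuntimeError("worker crashed")  # Simulated crash between steps.
    journal.step(lambda: send_email(charge_id))
    return charge_id


log: list = []  # Outlives both runs, like the durable log.
try:
    order_workflow(StepJournal(log), crash_after_charge=True)
except RuntimeError:
    pass
order_workflow(StepJournal(log), crash_after_charge=False)
print(charges)  # [4200]: the card was charged once across both runs
print(emails)   # ['ch_1']
```

In this sketch the result is appended only after `fn()` returns, so a crash in the gap between the side effect finishing and the checkpoint being written would re-run that step. A common way to close that gap is an idempotency key on the external call.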

Global concurrency. System-wide rate limiting so one rogue agent can’t exhaust your entire API quota. Queues and concurrency keys give you fine-grained control.
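The semantics of a global cap combined with per-key caps can be sketched in-process with `asyncio` semaphores. This is an illustration only. `ConcurrencyLimiter` and the limits chosen are hypothetical. In Polos the orchestrator enforces concurrency across all workers rather than inside one process. The sketch also caps in-flight calls rather than requests per second, which makes it a simpler stand-in for rate limiting.

```python
import asyncio
from collections import defaultdict


class ConcurrencyLimiter:
    """Hypothetical limiter: a global cap plus a separate cap per concurrency key."""

    def __init__(self, global_limit: int, per_key_limit: int) -> None:
        self._global = asyncio.Semaphore(global_limit)
        self._per_key_limit = per_key_limit
        self._per_key: dict = {}

    def _key_semaphore(self, key: str) -> asyncio.Semaphore:
        if key not in self._per_key:
            self._per_key[key] = asyncio.Semaphore(self._per_key_limit)
        return self._per_key[key]

    async def run(self, key: str, coro_fn, *args):
        # Take the per-key slot first, so a busy key waits on its own semaphore
        # instead of holding a global slot while it queues.
        async with self._key_semaphore(key):
            async with self._global:
                return await coro_fn(*args)


async def main():
    # Create the limiter inside the running event loop (safest on older Python versions).
    limiter = ConcurrencyLimiter(global_limit=3, per_key_limit=2)
    active: dict = defaultdict(int)
    peak: dict = defaultdict(int)

    async def fake_llm_call(agent: str) -> str:
        active[agent] += 1
        active["all"] += 1
        peak[agent] = max(peak[agent], active[agent])
        peak["all"] = max(peak["all"], active["all"])
        await asyncio.sleep(0.01)  # Stand-in for an OpenAI request.
        active[agent] -= 1
        active["all"] -= 1
        return agent

    agents = ["agent-a"] * 5 + ["agent-b"] * 5
    results = await asyncio.gather(*(limiter.run(a, fake_llm_call, a) for a in agents))
    return results, dict(peak)


results, peak = asyncio.run(main())
print(peak)  # agent-a and agent-b never exceed 2 each; "all" never exceeds 3
```

Keying by agent means one rogue agent can saturate only its own slots, while the global semaphore still bounds total usage of the shared quota.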

Human-in-the-loop. Pause execution for hours or days, wait for a user signal or approval, and resume with full context. Paused agents consume zero compute.

Exactly-once execution. Every action, like charging Stripe or sending an email, happens once, even if you retry the workflow, because Polos checkpoints every side effect.

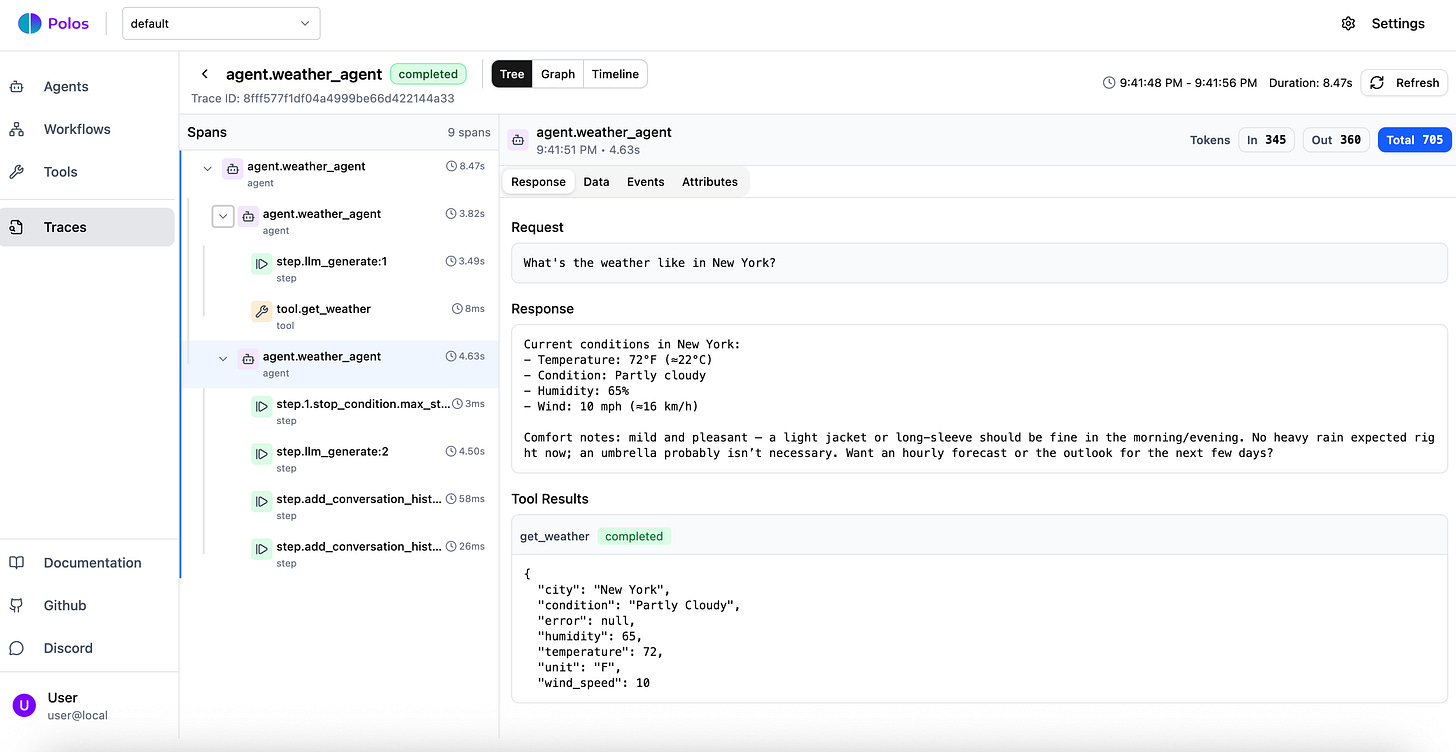

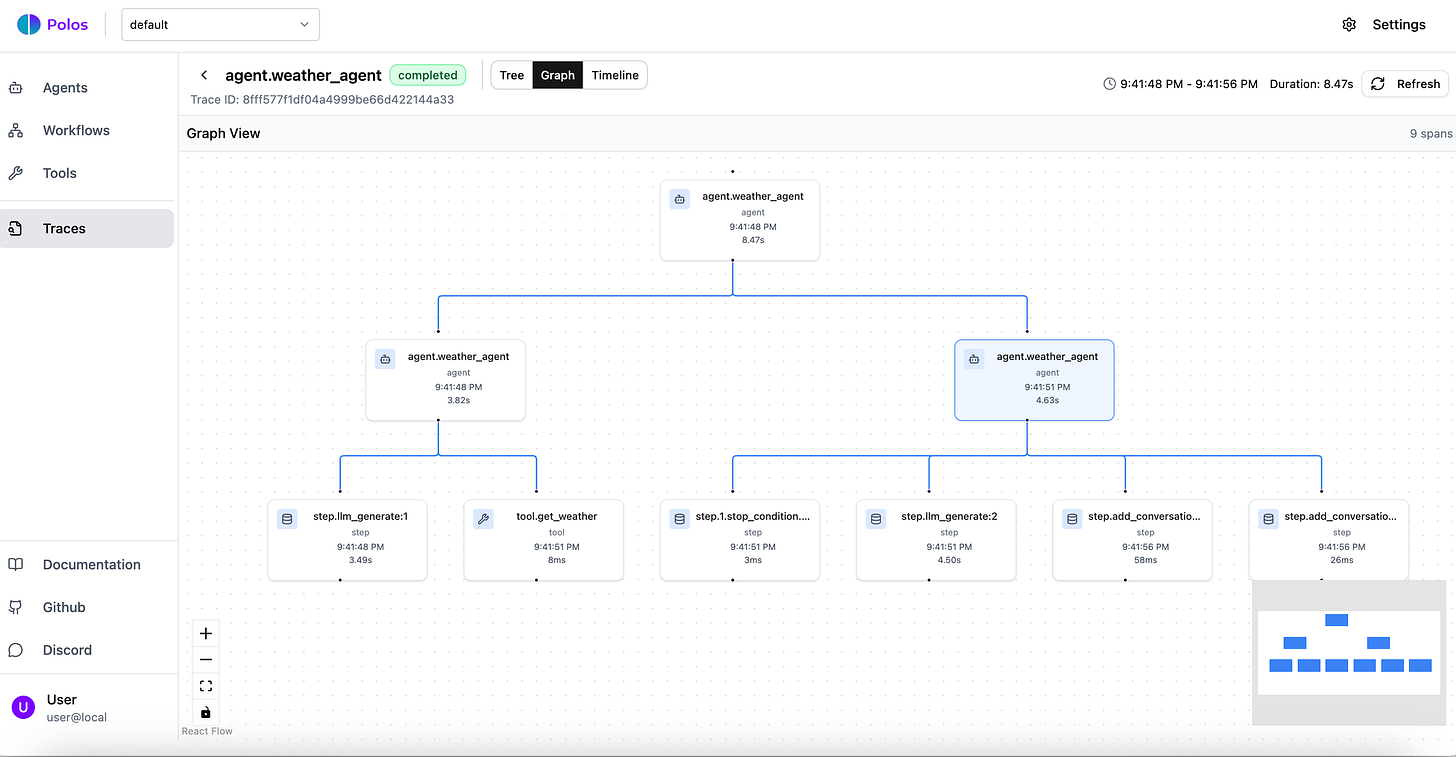

Built-in observability. Trace every tool call, every decision. See why your agent chose Tool B over Tool A.

See It In Action

Here’s a short demo showing crash recovery in practice:

We start an order workflow. The agent charges the customer via Stripe - the charge succeeds, and Polos checkpoints the result.

Since the order amount is flagged as unusual, the workflow suspends for a fraud review.

While waiting for the fraud team, the worker crashes.

In most frameworks, this workflow is dead. You’d need to handle the failure manually and risk charging the customer again.

With Polos, we simply start a new worker. When the fraud team approves, Worker 2 picks up the workflow exactly where it left off.

Stripe is not called again - Polos replays the result from its log. The confirmation email is sent, and the workflow completes.
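The demo's sequence can be reproduced as a small, self-contained Python simulation. Everything here is hypothetical and illustrative, not the Polos SDK. A dict stands in for the orchestrator's durable log. `ReplayContext` plays the role of a workflow context, and `Suspended` models parking a workflow until a signal arrives. Losing a worker is modeled by discarding its context object, because all the state that matters lives in the store.

```python
class Suspended(Exception):
    """Raised when the workflow must wait for a signal; nothing runs until it arrives."""


class ReplayContext:
    """Hypothetical workflow context that replays recorded results from a shared store."""

    def __init__(self, store: dict) -> None:
        self.store = store                        # Stand-in for the durable log.
        self.log: list = store.setdefault("log", [])
        self.pos = 0                              # Next position in the log for this run.

    def step(self, fn):
        if self.pos < len(self.log):
            result = self.log[self.pos]           # Replayed: the side effect is not re-run.
        else:
            result = fn()
            self.log.append(result)               # Checkpoint the new result.
        self.pos += 1
        return result

    def wait_for_signal(self, name: str):
        if self.pos < len(self.log):
            result = self.log[self.pos]           # Signal was consumed in an earlier run.
        elif name in self.store.get("signals", {}):
            result = self.store["signals"][name]
            self.log.append(result)
        else:
            raise Suspended(name)                 # Park the workflow until the signal exists.
        self.pos += 1
        return result


stripe_calls: list = []
sent_emails: list = []


def charge(amount: int) -> str:
    stripe_calls.append(amount)                   # Pretend Stripe API call.
    return f"ch_{len(stripe_calls)}"


def send_confirmation(charge_id: str) -> str:
    sent_emails.append(charge_id)                 # Pretend email API call.
    return "sent"


def order_workflow(ctx: ReplayContext, amount: int) -> str:
    charge_id = ctx.step(lambda: charge(amount))
    if amount > 1000:                             # Hypothetical "unusual amount" rule.
        if ctx.wait_for_signal("fraud_review") != "approved":
            return "needs_refund"
    ctx.step(lambda: send_confirmation(charge_id))
    return "completed"


store: dict = {}

# Worker 1: charges the card, then suspends for fraud review.
try:
    order_workflow(ReplayContext(store), 5000)
except Suspended:
    pass

# Worker 1 crashes: its ReplayContext is gone, but the store survives.
store.setdefault("signals", {})["fraud_review"] = "approved"  # Fraud team approves.

# Worker 2 replays from the log and finishes the workflow.
status = order_workflow(ReplayContext(store), 5000)
print(status, stripe_calls, sent_emails)  # completed [5000] ['ch_1']
```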

How It Works

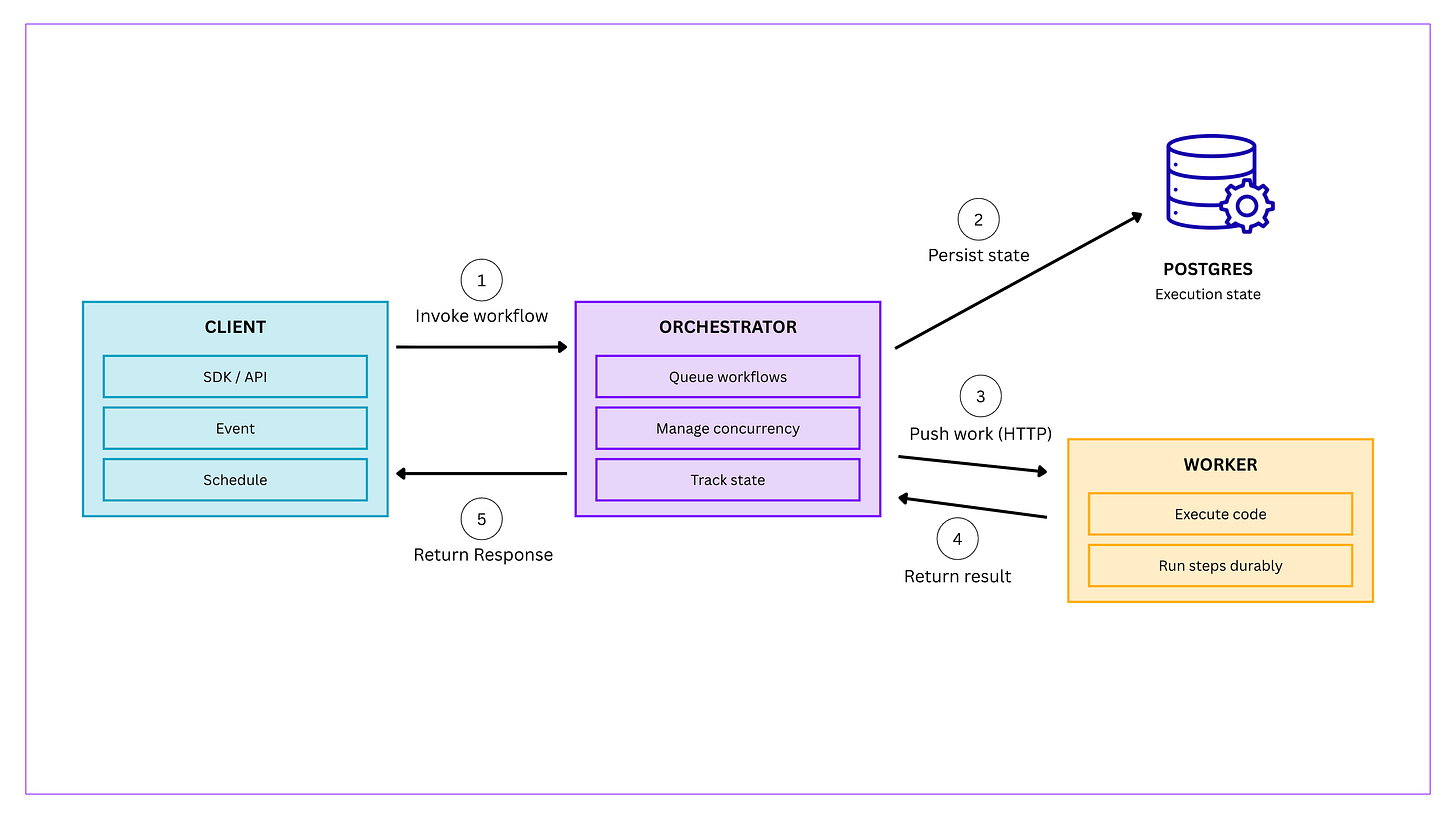

Polos has three components:

Orchestrator: manages workflow state, persists every side effect to a durable log, handles event routing, scheduling, and concurrency control. If a worker dies, the orchestrator knows exactly where execution left off and schedules the workflow on a different worker.

Workers: run your code. They connect to the orchestrator, pick up workflow steps, execute them (including LLM calls and tool invocations), and report results back. Workers are stateless and horizontally scalable.

SDK: what you import in your code. It provides the @workflow decorator, the Agent class, and the WorkflowContext, which gives you durable steps, suspend/resume, events, and concurrency primitives.
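The post doesn't say exactly how the orchestrator detects a dead worker. One common approach is time-limited leases renewed by heartbeats, and the Python sketch below assumes that design. `Orchestrator`, `poll`, `heartbeat`, and `complete` are hypothetical names. Time is passed in explicitly to keep the example deterministic.

```python
from dataclasses import dataclass


@dataclass
class Lease:
    worker_id: str
    expires_at: float


class Orchestrator:
    """Hypothetical scheduler that hands out workflows under time-limited leases."""

    def __init__(self, lease_seconds: float) -> None:
        self.lease_seconds = lease_seconds
        self.pending: list = []    # Workflow IDs waiting for a worker.
        self.leases: dict = {}     # Workflow ID -> Lease held by a worker.

    def submit(self, workflow_id: str) -> None:
        self.pending.append(workflow_id)

    def poll(self, worker_id: str, now: float):
        """Called by a worker asking for work; returns a workflow ID or None."""
        self._reclaim_expired(now)
        if not self.pending:
            return None
        workflow_id = self.pending.pop(0)
        self.leases[workflow_id] = Lease(worker_id, now + self.lease_seconds)
        return workflow_id

    def heartbeat(self, worker_id: str, workflow_id: str, now: float) -> None:
        lease = self.leases.get(workflow_id)
        if lease is not None and lease.worker_id == worker_id:
            lease.expires_at = now + self.lease_seconds  # Still alive: extend the lease.

    def complete(self, worker_id: str, workflow_id: str) -> None:
        lease = self.leases.get(workflow_id)
        if lease is not None and lease.worker_id == worker_id:
            del self.leases[workflow_id]

    def _reclaim_expired(self, now: float) -> None:
        # A worker that stopped heartbeating is presumed dead; requeue its workflows.
        for workflow_id, lease in list(self.leases.items()):
            if lease.expires_at <= now:
                del self.leases[workflow_id]
                self.pending.append(workflow_id)


orch = Orchestrator(lease_seconds=10)
orch.submit("order-123")
first = orch.poll("worker-1", now=0)            # worker-1 takes the workflow
orch.heartbeat("worker-1", "order-123", now=5)  # lease now runs until t=15
# worker-1 crashes here and stops sending heartbeats.
early = orch.poll("worker-2", now=12)           # None: the lease has not expired yet
late = orch.poll("worker-2", now=16)            # "order-123": reassigned to worker-2
```

After reassignment, worker-2 would rebuild progress by replaying the durable log rather than relying on anything worker-1 held in memory. That is what allows workers to stay stateless.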

Under the hood, Polos records the result of every side effect - tool calls, API responses, time delays - in a durable log. If your process dies, Polos replays the workflow from the log, returning previously recorded results instead of re-executing them. Your agent’s exact local variables and call stack are restored in milliseconds.

Completed steps are never re-executed - so you never pay for an LLM call twice.
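Time delays are side effects too. If a workflow sleeps for an hour and the worker dies halfway through, the wait should not restart from zero. Below is a minimal sketch that assumes a durable sleep checkpointing its wake-up deadline, not its duration, in the log. `DurableClock` and `FakeClock` are hypothetical names. The sketch blocks the worker for simplicity. A real orchestrator would more likely park the workflow and reschedule it at the deadline, so the wait consumes no compute.

```python
import time
from typing import Callable


class DurableClock:
    """Hypothetical durable sleep: the deadline is logged once and reused on replay."""

    def __init__(self, log: list, now: Callable[[], float] = time.time,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.log = log
        self.pos = 0
        self.now = now
        self.sleep_fn = sleep

    def sleep(self, seconds: float) -> float:
        if self.pos < len(self.log):
            wake_at = self.log[self.pos]        # Deadline recorded by an earlier run.
        else:
            wake_at = self.now() + seconds
            self.log.append(wake_at)            # Checkpoint before waiting.
        self.pos += 1
        remaining = wake_at - self.now()
        if remaining > 0:
            self.sleep_fn(remaining)            # Only wait for what is left.
        return wake_at


class FakeClock:
    """Deterministic clock so the example runs instantly."""

    def __init__(self, start: float) -> None:
        self.t = start
        self.slept: list = []

    def now(self) -> float:
        return self.t

    def sleep(self, seconds: float) -> None:
        self.slept.append(seconds)
        self.t += seconds


def crash(_seconds: float) -> None:
    raise RuntimeError("worker crashed while waiting")


log: list = []
clock = FakeClock(start=100.0)

# Run 1 records the deadline 100 + 3600 = 3700, then dies mid-wait.
try:
    DurableClock(log, clock.now, crash).sleep(3600)
except RuntimeError:
    pass

clock.t = 3000.0  # A new worker resumes the workflow later.
DurableClock(log, clock.now, clock.sleep).sleep(3600)
print(log, clock.slept)  # [3700.0] [700.0]
```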

Get Involved

Polos is open source: github.com/polos-dev/polos

Star us on GitHub, join the Discord, and give it a spin. We’re building this in the open and would love your feedback and contributions.